Application development teams use AI-based code-generation tools to build programs faster and, ideally, with fewer bugs. Some estimates suggest AI coding tools can deliver results up to 45% faster, and more ambitious claims say they outperform human programmers by 10x. AI for coding is arguably the hottest trend of 2024. However, AI code-generation tools also pose serious security threats, and IT and security teams have to deal with them. Many AppSec analysts see AI-based code-generation tools as a Trojan horse. According to a recent study, 15% of leaders said they have banned the use of AI for code generation. Despite such prohibitions, 99% of security professionals said AI is being used to generate code in their organizations.

Checkmarx, a leading cloud-native AppSec testing platform, published a report that outlines a seven-step roadmap for safely integrating generative AI into application security practices. The study is a deep dive into the complex relationship between development and security teams, and it reveals a critical tension: organizations need to harness AI's productivity benefits while also mitigating emerging risks.

The report underscores how widely development teams have adopted AI tools: a staggering 99 percent of respondents said AI is being used for code generation in their organizations, often without controls. At the same time, 80 percent expressed concern about the security threats this could introduce.

Checkmarx’s comprehensive guide offers CIOs and CISOs practical steps to navigate this challenge, empowering organizations to unlock the full potential of AI while safeguarding their applications.

In this article, we distill the key takeaways from the report on enterprise use of AI-based code-generation tools and the security threats they introduce.

#1 AI Purchasing Decisions in Software Development Remain Uncertain

Checkmarx’s AppSec testing report found that just over one-fourth (26%) of AppSec managers and CISOs have an AI investment plan for their organization. Most decision-makers have purchased an AI tool or plan to do so in the next 24 months. Notably, 70% of respondents said their organization lets different groups buy AI tools on a case-by-case basis rather than following a central strategy. AppSec managers are clearly excited about using AI for code generation and testing, but uncertainty looms large over the outcomes. The rampant use of AI in application development means AppSec managers have yet another tool to manage, monitor, and govern under their compliance and data privacy policies.

#2 No Rush to Adopt GenAI Tools

Despite the launch of ChatGPT and the subsequent arrival of powerful GenAI copilots and LLMs, the AppSec industry is not rushing into AI purchases. AppSec managers and CISOs are adopting GenAI tools slowly, and significant effort is going into investigating the use of AI at the testing, experimentation, and deployment stages.

While generative AI is reshaping the software development landscape, it poses unprecedented security challenges to enterprise app development teams. App developers who embrace AI tools to accelerate coding risk introducing new types of vulnerabilities into their existing workflows, many of which may remain undetected until a disaster strikes.

Checkmarx’s recent report underscores this dichotomy.

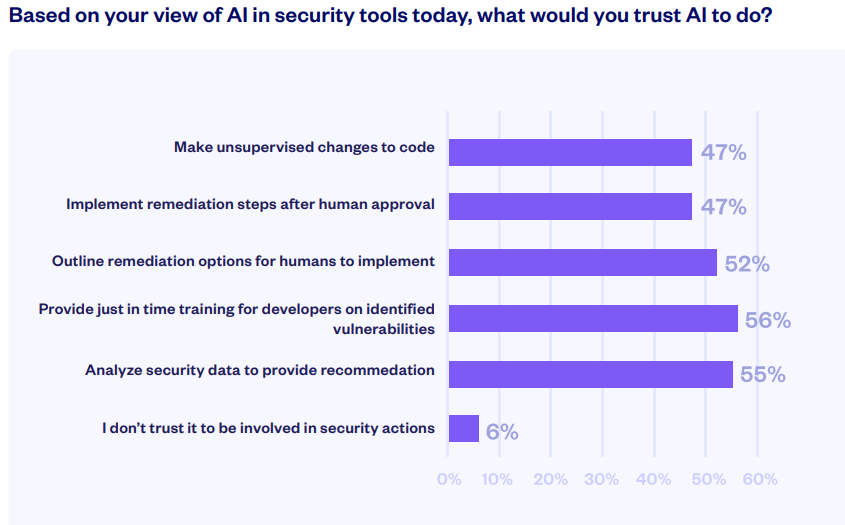

A staggering 47% of respondents said they allow AI to make unsupervised code changes, highlighting the enthusiasm for AI-driven development. By contrast, only 6% of respondents said they do not trust AI to take any security actions on their behalf. Such broad trust in AI, set against the widespread security concerns the survey also uncovered, reveals a critical gap in the industry’s approach to AI and security.

The report further emphasizes the need for robust security measures to counter the risks associated with AI-generated code. Security teams face a flood of new code to analyze, which calls for advanced tools and processes to manage and prioritize vulnerabilities effectively.

To address this challenge, organizations must adopt a holistic approach that combines AI-powered development with stringent security measures. By striking the right balance, businesses can harness the benefits of AI while mitigating potential risks.

Checkmarx’s report found that only 2% of respondents have purchased a GenAI solution for their operations, while 29% are in the testing and experimentation stages with no plans to invest in AI tools yet. Another 16% of respondents plan to purchase a GenAI tool in the next 12 months, and 42% might consider investigating GenAI adoption at some point in the future.

The report highlights how easy and intuitive it is to adopt AI for development. Businesses can adopt AI-generated code for quicker turnarounds, but the report also outlines risks that could open the way to new attack vectors.

As a result, governance gaps cast considerable doubt on current practices.

#3 Authorized AI Use versus AppSec Risks

Checkmarx found that only 14% of survey respondents have an “official” AI tool for code generation. Even where such a tool is authorized for use, it lacks strong governance and risk assessment frameworks to address cybersecurity challenges, which widens the governance gap among application DevOps teams. According to the report, 92 percent of respondents have AppSec concerns stemming from the deployment of AI-based code-generation tools.

The common AI-related AppSec risks mentioned in the report are:

AI hallucinations:

Hallucinations are false or fabricated outputs, such as nonexistent functions or data points, that a model presents as plausible. Malicious actors could exploit these patterns.
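One commonly discussed way a hallucination becomes exploitable is when a model suggests importing a package that does not exist; an attacker who later publishes a package under that name could get malicious code installed. The Python sketch below flags imports in AI-generated code that are not on a vetted list. The allowlist `APPROVED_MODULES`, the function `unapproved_imports`, and the module name `hallucinated_jsonkit` are hypothetical, and a real check would more likely consult an internal package mirror than a hard-coded set.

```python
import ast

# Hypothetical allowlist of top-level modules your organization has vetted.
APPROVED_MODULES = {"json", "os", "requests", "numpy"}


def unapproved_imports(source: str, approved: set) -> set:
    """Return top-level module names imported by `source` that are not in `approved`."""
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            # `import a.b.c` -> record "a"
            for alias in node.names:
                found.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            # Absolute `from a.b import c` -> record "a"; relative imports are skipped.
            found.add(node.module.split(".")[0])
    return found - approved


# AI-generated snippet; `hallucinated_jsonkit` stands in for a package that may not exist.
generated = """
import requests
import numpy.linalg
from hallucinated_jsonkit import loads
"""

print(sorted(unapproved_imports(generated, APPROVED_MODULES)))  # ['hallucinated_jsonkit']
```

The check only parses the code and never executes it, so it is safe to run on untrusted suggestions before anyone installs dependencies.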

Prompt injections:

Prompt injection tricks an AI system into undesired actions, behaviors, or outcomes by “injecting” malicious instructions into the prompts or content the model processes.

Secrets leakage:

Companies could lose intellectual property through accidental leaks via the GenAI tools used to create custom code. That code, along with any secrets embedded in it, could end up being used outside the organization if developers don’t restrict access to it or modify it before reusing it.

Likewise, GenAI tools could become a launchpad for data leaks, putting user data, data lakes, and connected systems at risk.
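A basic safeguard against secrets leakage is to mask likely credentials before code is pasted into or sent to a GenAI tool. The Python sketch below assumes two illustrative rules: the well-known AWS access key ID format (`AKIA` followed by 16 uppercase letters or digits) and hard-coded assignments to names such as `password`. The rule list and `redact_secrets` are hypothetical; dedicated secret scanners such as gitleaks or TruffleHog ship far larger rule sets.

```python
import re

# Illustrative rules only: each pair is (compiled pattern, replacement).
SECRET_RULES = [
    # AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits.
    (re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "[REDACTED]"),
    # Hard-coded assignments such as password = "..."; keep the name, mask the value.
    (
        re.compile(r"""(?i)\b(password|secret|api_key|token)(\s*=\s*)(["'])[^"']+\3"""),
        r"\1\2\3[REDACTED]\3",
    ),
]


def redact_secrets(code: str) -> tuple[str, int]:
    """Mask likely secrets before code leaves the organization; return (code, match_count)."""
    total = 0
    for pattern, replacement in SECRET_RULES:
        code, count = pattern.subn(replacement, code)
        total += count
    return code, total


snippet = 'aws_key = "AKIAABCDEFGHIJKLMNOP"\npassword = "hunter2"\nprint("ok")'
redacted, hits = redact_secrets(snippet)
print(hits)  # 2
print(redacted)
```

Pattern-based redaction will miss secrets that don't match a rule, so it complements, rather than replaces, policies on what code may be shared with external tools.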

GenAI-powered insecure coding:

GenAI tools may not be trained to follow secure coding practices. Insecure code can result from bad coding practices the model reproduces, or be delivered through insecure libraries, packages, and patches it suggests.
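SQL built by string interpolation is a classic example of the insecure patterns a code assistant can reproduce. The Python sketch below uses the standard-library `sqlite3` module with a throwaway in-memory table; the table, function names, and payload are illustrative. The unsafe version lets crafted input rewrite the query, while the parameterized version passes the input strictly as data.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Insecure pattern: user input is interpolated directly into the SQL text.
    query = f"SELECT id, name FROM users WHERE name = '{name}' ORDER BY id"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized query: the driver binds `name` as a value, never as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ? ORDER BY id", (name,)
    ).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

payload = "x' OR '1'='1"
print(find_user_unsafe(conn, payload))  # [(1, 'alice'), (2, 'bob')]: every row leaks
print(find_user_safe(conn, payload))    # []: no user has that literal name
```

Static analysis (SAST) tools typically flag the first pattern, which is one reason AI-generated code still benefits from the same scanning as human-written code.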

License risks:

Open-source license ‘tainting’ could lead to IP breaches. GenAI tools may have been trained on data they lacked clear rights to use. As a result, code generated with AI may raise doubts about the company’s right to use and run that code in business operations, resulting in possible license infringements.
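One narrow, automatable piece of license risk is checking the declared licenses of dependencies that AI-suggested code pulls in. The Python sketch below compares SPDX identifiers against an approved set. The policy `ALLOWED_LICENSES`, the function `license_findings`, and the dependency names are hypothetical, and the dependency-to-license mapping is assumed to come from your package metadata or a software bill of materials.

```python
from typing import Dict, Optional, Set

# Hypothetical policy: SPDX license identifiers pre-approved by your legal team.
ALLOWED_LICENSES = {"MIT", "Apache-2.0", "BSD-2-Clause", "BSD-3-Clause"}


def license_findings(deps: Dict[str, Optional[str]], allowed: Set[str]) -> Dict[str, str]:
    """Return dependencies whose declared license is missing or outside the allowlist."""
    findings: Dict[str, str] = {}
    for name, spdx_id in deps.items():
        if not spdx_id:
            findings[name] = "no license declared"
        elif spdx_id not in allowed:
            # Exact match only: compound expressions like "MIT OR GPL-2.0-only" also land here.
            findings[name] = f"{spdx_id} needs legal review"
    return findings


# Hypothetical dependencies introduced by AI-suggested code.
suggested = {"fastparse-lib": "MIT", "copyleft-utils": "GPL-3.0-only", "mystery-helpers": None}
print(license_findings(suggested, ALLOWED_LICENSES))
```

This does not detect snippets copied verbatim from licensed code into the generated output; that kind of tainting needs snippet-matching tools and human review.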

#4 AI Benefits for Security Teams

For every 150 developers, there is only one AppSec manager. Ideally, GenAI can help lean AppSec teams close these skills and resource gaps. In practice, relying solely on human AppSec engineers to identify and remediate every vulnerability once it’s detected is a massive undertaking. According to Checkmarx, secure-coding training approaches such as Codebashing are useful in such scenarios, and AI can play a decisive role in strengthening security posture. In the survey, 31% of respondents felt AI can help security teams remediate existing vulnerabilities faster. (We are unsure how effective this remediation would be, though!)

Another 32% of respondents said AI can help fine-tune AppSec solutions for different applications. Only 6% of respondents don’t trust AI in security tools to take any actions on their behalf.

Meanwhile, 56 percent of AppSec managers and CISOs see AI in security tools as a way to provide developers with just-in-time training on identified vulnerabilities, and 52 percent use AI to outline remediation options for human AppSec managers to test and deploy.

GenAI for Application Security: How to Safely Take Advantage of the Technology

Checkmarx’s latest report notes GenAI’s popularity among developers for application development. It optimistically portrays AI as a means to automate and accelerate code generation and, to an extent, to detect vulnerabilities with the same technology. It also warns of AI risks, especially when malicious actors use GenAI to expose and exploit existing security gaps. To help thwart such attacks and close these gaps, Checkmarx provides a seven-step guideline for CISOs.

Here’s a quick overview:

- Establish standardized governance for GenAI adoption in the company’s context

- Map the risks AI and LLMs pose to the developer workflows

- Identify the known and unknown attack vectors and cyber threats

- Enable vendors and customers to perform security tasks faster with powerful GenAI experience management

- Boost developer and AppSec productivity with GenAI

- Assess the IT and application landscape across the organization to identify how many teams and employees use GenAI tools

- Evaluate AppSec vendors who can provide secured GenAI tools to developers
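The assessment step in the list can start with something as simple as tallying who uses which GenAI tools. The Python sketch below assumes you already have usage records, for example exported from a web proxy or SSO log. The `UsageRecord` format, the tool names, and the approved list are all hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, NamedTuple, Set


class UsageRecord(NamedTuple):
    employee: str
    team: str
    tool: str


def summarize_genai_usage(records: Iterable[UsageRecord], approved: Set[str]) -> Dict[str, dict]:
    """Count distinct employees and teams per GenAI tool and flag unapproved tools."""
    employees: Dict[str, Set[str]] = defaultdict(set)
    teams: Dict[str, Set[str]] = defaultdict(set)
    for record in records:
        employees[record.tool].add(record.employee)
        teams[record.tool].add(record.team)
    return {
        tool: {
            "employees": len(employees[tool]),
            "teams": len(teams[tool]),
            "approved": tool in approved,
        }
        for tool in sorted(employees)
    }


records = [
    UsageRecord("ana", "payments", "assistant-a"),
    UsageRecord("ben", "payments", "assistant-a"),
    UsageRecord("cho", "mobile", "assistant-a"),
    UsageRecord("cho", "mobile", "assistant-b"),
    UsageRecord("ana", "payments", "assistant-a"),  # repeat visit, counted once
]
for tool, stats in summarize_genai_usage(records, approved={"assistant-a"}).items():
    print(tool, stats)
```

Counting distinct people and teams (rather than raw log lines) keeps heavy users from skewing the picture, and the `approved` flag surfaces shadow AI use that governance policies have not yet covered.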

Conclusion

Checkmarx’s report describes the different scenarios in application development and security testing where GenAI can become both a disruptor and a catalyst. However, it also raises a red flag about GenAI’s potential to become an attack vector, posing new security risks to companies that use AI and LLMs for code generation.